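Overview

In a highly available SAP NetWeaver system, ASCS and ERS need to be installed on external shared storage so that the cluster can fail them over. This requires a highly available NFS file server, which is the focus of this article.

Microsoft's latest document, "High availability for NFS on Azure VMs on SUSE Linux Enterprise Server", uses two machines that back each other up to provide file services for two SAP systems. My current deployment has only one SAP system, so only NW1 needs to be configured.

Main configuration parameters

- Pacemaker fencing uses an SBD device, and file replication uses DRBD.

- The configuration involves two virtual machines: nfs01 and nfs02.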

- The front end of the NFS load balancer is vnfs01, and the probe port is 61000 (the port the nc_NW1_nfs resource listens on).

- The virtual IP address of the NFS cluster is 10.0.0.10.

Note: as in the official documentation, the step prefixes mean:

- [A]: run on both machines

- [1]: run only on node 1

- [2]: run only on node 2

Create the Pacemaker cluster

Set up the SBD device

[A] Connect to the iSCSI device

Enable the iSCSI and SBD services:

nfs01:~ # sudo systemctl enable iscsid

Created symlink from /etc/systemd/system/multi-user.target.wants/iscsid.service to /usr/lib/systemd/system/iscsid.service.

nfs01:~ # sudo systemctl enable iscsi

nfs01:~ # sudo systemctl enable sbd

Created symlink from /etc/systemd/system/corosync.service.requires/sbd.service to /usr/lib/systemd/system/sbd.service.

[1] Change the initiator name on the first node

nfs01:~ # sudo vi /etc/iscsi/initiatorname.iscsi

Set InitiatorName to:

InitiatorName=iqn.2006-04.nfs01.local:nfs01

[2] Change the initiator name on the second node

nfs02:~ # sudo vi /etc/iscsi/initiatorname.iscsi

Set InitiatorName to:

InitiatorName=iqn.2006-04.nfs02.local:nfs02

[A] Restart the iSCSI services

Restart the iSCSI services so that they load the new configuration:

nfs01:~ # sudo systemctl restart iscsid

nfs01:~ # sudo systemctl restart iscsi

Connect to the iSCSI device. 10.0.0.51 is the address of the target server, the port is 3260, and the SBD device for the NFS cluster is named iqn.2006-04.vnfs01.local:vnfs01.

nfs01:~ # sudo iscsiadm -m discovery --type=st --portal=10.0.0.51:3260

10.0.0.51:3260,1 iqn.2006-04.vnfs01.local:vnfs01

10.0.0.51:3260,1 iqn.2006-04.vascs01.local:vascs01

10.0.0.51:3260,1 iqn.2006-04.vhana01.local:vhana01

nfs01:~ # sudo iscsiadm -m node -T iqn.2006-04.vnfs01.local:vnfs01 --login --portal=10.0.0.51:3260

Logging in to [iface: default, target: iqn.2006-04.vnfs01.local:vnfs01, portal: 10.0.0.51,3260] (multiple)

Login to [iface: default, target: iqn.2006-04.vnfs01.local:vnfs01, portal: 10.0.0.51,3260] successful.

nfs01:~ # sudo iscsiadm -m node -p 10.0.0.51:3260 --op=update --name=node.startup --value=automatic

Make sure the iSCSI device is available and note its device name, which later steps use:

nfs01:~ # lsscsi | grep vnfs01

[6:0:0:0] disk LIO-ORG vnfs01 4.0 /dev/sdd

Now look up the device ID from the device name:

nfs01:~ # ls -l /dev/disk/by-id/scsi-* | grep '.*3600.*sdd'

lrwxrwxrwx 1 root root 9 Apr 28 02:03 /dev/disk/by-id/scsi-36001405cb3ac7b3fb5547f8a2a3882b6 -> ../../sdd

The entry that starts with scsi-3 is the device ID we need: /dev/disk/by-id/scsi-36001405cb3ac7b3fb5547f8a2a3882b6
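The manual lookup above (find the by-id link that starts with scsi-3 and points at the device reported by lsscsi) could be scripted roughly as follows. This is only a sketch: the function name find_sbd_device_id and its optional second argument (the directory to search, defaulting to /dev/disk/by-id) are hypothetical and not part of the original procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first scsi-3* link in the by-id directory
# whose target resolves to the block device given as $1.
find_sbd_device_id() {
    local dev="$1" dir="${2:-/dev/disk/by-id}" link
    for link in "$dir"/scsi-3*; do
        # Skip the unexpanded glob pattern and anything that is not a symlink.
        [ -L "$link" ] || continue
        if [ "$(readlink -f "$link")" = "$(readlink -f "$dev")" ]; then
            printf '%s\n' "$link"
            return 0
        fi
    done
    # No matching link was found.
    return 1
}
```

On the node from this article, the call would be `find_sbd_device_id /dev/sdd`.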

[1] Create the SBD device

nfs01:~ # sudo sbd -d /dev/disk/by-id/scsi-36001405cb3ac7b3fb5547f8a2a3882b6 -1 10 -4 20 create

Initializing device /dev/disk/by-id/scsi-36001405cb3ac7b3fb5547f8a2a3882b6

Creating version 2.1 header on device 4 (uuid: d8957ff4-3a0d-4f5e-8004-59e79a82b06b)

Initializing 255 slots on device 4

Device /dev/disk/by-id/scsi-36001405cb3ac7b3fb5547f8a2a3882b6 is initialized.

[A] Adapt the SBD configuration

Open the SBD configuration file:

nfs01:~ # sudo vi /etc/sysconfig/sbd

Set SBD_DEVICE, and make sure that SBD_PACEMAKER=yes and SBD_STARTMODE=always, for example:

SBD_DEVICE="/dev/disk/by-id/scsi-36001405cb3ac7b3fb5547f8a2a3882b6"

SBD_PACEMAKER=yes

SBD_STARTMODE=always
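Since this file is edited by hand on both nodes, a quick check of the three settings can catch typos before the cluster is started. The sketch below is an illustration only; check_sbd_config is a hypothetical name, and the patterns assume simple KEY=value lines as in the sample above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the three SBD settings used in this article.
# $1 is the file to check (defaults to /etc/sysconfig/sbd).
check_sbd_config() {
    local file="${1:-/etc/sysconfig/sbd}" rc=0
    # SBD_DEVICE must be set to a non-empty value (quotes are optional).
    if ! grep -Eq '^SBD_DEVICE="?[^"[:space:]]+' "$file"; then
        echo "SBD_DEVICE is not set" >&2
        rc=1
    fi
    if ! grep -Eq '^SBD_PACEMAKER="?yes"?[[:space:]]*$' "$file"; then
        echo "SBD_PACEMAKER is not yes" >&2
        rc=1
    fi
    if ! grep -Eq '^SBD_STARTMODE="?always"?[[:space:]]*$' "$file"; then
        echo "SBD_STARTMODE is not always" >&2
        rc=1
    fi
    return "$rc"
}
```

Running `check_sbd_config` on each node returns 0 when all three settings are present and prints the missing ones otherwise.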

Create the softdog configuration file:

nfs01:~ # echo softdog | sudo tee /etc/modules-load.d/softdog.conf

softdog

Load the softdog module:

nfs01:~ # sudo modprobe -v softdog

insmod /lib/modules/4.4.114-94.11-default/kernel/drivers/watchdog/softdog.ko

Cluster installation

[A] Update SLES

nfs01:~ # sudo zypper update

[1] Enable SSH access

nfs01:~ # sudo ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:9hrDykShU0qFv5JzgfPaBnWP7MWjzdDx1VwIF9PtuX4 root@nfs01

The key's randomart image is:

+---[RSA 2048]----+

| .. ..++.|

| .. ...+|

| .oo +o|

| .o++.. . ..+|

| +=.=S= o . .|

| =o+o+.* . . |

| B..+*.. . |

| .oo.o+o .E|

| .o . .|

+----[SHA256]-----+

Display and copy the public key of nfs01:

nfs01:~ # sudo cat /root/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDWJZsz6YKz1i8YkubekqYDktdmfjfAH6YI+1SFAgf5pXEQpIodraK0P1UdHMS3TQYdvcf6a4PhW8xm91h+AjfTAiJohixpw4/fWdmZtt17ScM78I4hf4u+nwZyB7PQnfSNff75t23IPcMgLlyu/TfFv2txZWxuCea7VUXhVjR7BDzbWEi5F7Dis06xPrJ2lk0u1zh6puuLUaKwV+hqqI/7rZw+F/zqt8OSr78nnuPQc9ThqN0Y90k9i2Vl7sBYzd0L0NjlJZ5wENN5rpznTeI++MEa+QJA3VqWEPulpgpXewnCakk/MGMHz33seuKlxJhESPW5uQxeIfovO+5pot2/ root@nfs01

[2] Enable SSH access

Generate a key pair:

nfs02:~ # sudo ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:iTY/HWUWysKCLZVUGTMpNlRdG5iouHADs8CC36S/XT0 root@nfs02

The key's randomart image is:

+---[RSA 2048]----+

|o o++** ++ |

|+.o .+=.+++. + |

|.o Booo+o o = |

| = =..o o + |

| + o+ S.. |

| o. o..E. |

| o .o .. |

| . . . |

| |

+----[SHA256]-----+

Paste the public key of nfs01 into the authorized keys file on nfs02:

nfs02:~ # sudo vi /root/.ssh/authorized_keys

Then display and copy the public key of nfs02:

nfs02:~ # sudo cat /root/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC78w15ZHlbshX+1TLJpXSRjk1sL4NgjRYeL5Q7LPgN/tV155QcGbHPoqCjQv2xTCconCqF+EPgWBeGP1/mYeqCVvbEgKYpC14IKd/jGxw31lTSP6Dr267qEltgZeWwdXMALGjVqrj1jB8iYxdoY1oDCddL5MgR51MuuibzoslDCbf5ZJ+UeSoejzOHKL16rwMopvQfVQesVpMgFUfA8yww7QU5U90LpBZopU4t1g6T6cvQN2m3jGpBwZgrTZSQq2cOgvA5wHGFUpoyiI36kcZ9qWRKW6MyHGzGdAPt94TSC+k61t9PrR0Aq97L0uT0wXl+14bv3dIE8I8+QTn4f+NL root@nfs02

[1] Enable SSH access

Paste the public key of nfs02 into the authorized keys file on nfs01:

nfs01:~ # sudo vi /root/.ssh/authorized_keys

[A] Install the HA extension

nfs01:~ # sudo zypper install sle-ha-release fence-agents

[A] Set up host name resolution

Add the host records:

nfs01:~ # sudo vi /etc/hosts

10.0.0.11 nfs01

10.0.0.12 nfs02
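Because this step runs on both nodes and is easy to repeat by accident, the records can also be appended idempotently instead of edited with vi. The following is a minimal sketch under that assumption; add_host_entry is a hypothetical helper, and its third argument (the file to edit, defaulting to /etc/hosts) exists only so that it can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless a non-comment
# line already lists NAME as a host name.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # awk exits 0 when NAME is already present in any host-name column.
    if awk -v n="$name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Run as root, `add_host_entry 10.0.0.11 nfs01` and `add_host_entry 10.0.0.12 nfs02` would add the two records above once, however often they are executed.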

[1] Install the cluster

nfs01:~ # sudo ha-cluster-init

! NTP is not configured to start at system boot.

Do you want to continue anyway (y/n)? y

/root/.ssh/id_rsa already exists - overwrite (y/n)? n

Network address to bind to (e.g.: 192.168.1.0) [10.0.0.0] [Enter]

Multicast address (e.g.: 239.x.x.x) [239.38.88.171] [Enter]

Multicast port [5405]

SBD is already configured to use /dev/disk/by-id/scsi-36001405cb3ac7b3fb5547f8a2a3882b6 - overwrite (y/n)? n

Hawk cluster interface is now running. To see cluster status, open:

https://10.0.0.11:7630/

Do you wish to configure an administration IP (y/n)? n

Done (log saved to /var/log/ha-cluster-bootstrap.log)

[2] Add the node to the cluster

nfs02:~ # sudo ha-cluster-join

Do you want to continue anyway (y/n)? y

IP address or hostname of existing node (e.g.: 192.168.1.1) []10.0.0.11

Retrieving SSH keys - This may prompt for root@10.0.0.11:

/root/.ssh/id_rsa already exists - overwrite (y/n)? n

[A] Reset the hacluster password

nfs01:~ # sudo passwd hacluster

New password:

Retype new password:

passwd: password updated successfully

[A] Configure the corosync node list

Edit the corosync configuration file:

nfs01:~ # sudo vi /etc/corosync/corosync.conf

Update the values of transport, nodelist, expected_votes, and two_node:

# Please read the corosync.conf.5 manual page

totem {

version: 2

secauth: on

crypto_hash: sha1

crypto_cipher: aes256

cluster_name: hacluster

clear_node_high_bit: yes

token: 5000

token_retransmits_before_loss_const: 10

join: 60

consensus: 6000

max_messages: 20

interface {

ringnumber: 0

bindnetaddr: 10.0.0.0

mcastaddr: 239.90.36.91

mcastport: 5405

ttl: 1

}

transport: udpu

}

nodelist {

node {

# nfs01

ring0_addr:10.0.0.11

}

node {

# nfs02

ring0_addr:10.0.0.12

}

}

logging {

fileline: off

to_stderr: no

to_logfile: no

logfile: /var/log/cluster/corosync.log

to_syslog: yes

debug: off

timestamp: on

logger_subsys {

subsys: QUORUM

debug: off

}

}

quorum {

# Enable and configure quorum subsystem (default: off)

# see also corosync.conf.5 and votequorum.5

provider: corosync_votequorum

expected_votes: 2

two_node: 1

}
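The nodelist section is the part of this file that differs from one deployment to the next. As an illustration only, it could be generated from a list of node names and addresses rather than typed by hand. The function render_nodelist and its name=address argument format are hypothetical; the output mirrors the nodelist block shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a corosync nodelist block.
# Each argument has the assumed form name=address, e.g. nfs01=10.0.0.11.
render_nodelist() {
    local entry
    echo "nodelist {"
    for entry in "$@"; do
        # ${entry%%=*} is the node name, ${entry#*=} is its ring0 address.
        printf '  node {\n    # %s\n    ring0_addr: %s\n  }\n' \
            "${entry%%=*}" "${entry#*=}"
    done
    echo "}"
}
```

For the two nodes in this article, the call would be `render_nodelist nfs01=10.0.0.11 nfs02=10.0.0.12`; the result still has to be merged into /etc/corosync/corosync.conf manually.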

Restart the corosync service:

nfs01:~ # sudo service corosync restart

[1] Change the Pacemaker default settings

nfs01:~ # sudo crm configure rsc_defaults resource-stickiness="1"

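Configure the NFS server

[A] Set up host name resolution

Edit the hosts file and add a record that maps the virtual IP to its host name. My system already has name resolution in place, so this step can be skipped there.

nfs01:~ # sudo vi /etc/hosts

Add:

10.0.0.10 vnfs01

[A] Enable the NFS server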

nfs01:~ # sudo sh -c 'echo /srv/nfs/ *\(rw,no_root_squash,fsid=0\)>/etc/exports'

nfs01:~ # sudo mkdir /srv/nfs/

nfs01:~ # sudo systemctl enable nfsserver

Created symlink from /etc/systemd/system/multi-user.target.wants/nfsserver.service to /usr/lib/systemd/system/nfsserver.service.

nfs01:~ # sudo service nfsserver restart

[A] Install the drbd components

nfs01:~ # sudo zypper install drbd drbd-kmp-default drbd-utils

[A] Create a partition for the drbd device

List the data disks:

nfs01:~ # sudo ls /dev/disk/azure/scsi1/

lun0

Create a partition on the data disk:

nfs01:~ # sudo sh -c 'echo -e "n\n\n\n\n\nw\n" | fdisk /dev/disk/azure/scsi1/lun0'

Welcome to fdisk (util-linux 2.29.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0x8515ae03.

Command (m for help): Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p):

Using default response p.

Partition number (1-4, default 1): First sector (2048-268435455, default 2048): Last sector, +sectors or +size{K,M,G,T,P} (2048-268435455, default 268435455):

Created a new partition 1 of type 'Linux' and of size 128 GiB.

Command (m for help): The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

[A] Create the LVM configuration

List the available partitions:

nfs01:~ # ls /dev/disk/azure/scsi1/lun*-part*

/dev/disk/azure/scsi1/lun0-part1

Create the LVM physical volume, volume group, and logical volume on the partition:

nfs01:~ # sudo pvcreate /dev/disk/azure/scsi1/lun0-part1

Physical volume "/dev/sdc1" successfully created

nfs01:~ # sudo vgcreate vg-NW1-NFS /dev/disk/azure/scsi1/lun0-part1

Volume group "vg-NW1-NFS" successfully created

nfs01:~ # sudo lvcreate -l 100%FREE -n NW1 vg-NW1-NFS

Logical volume "NW1" created.

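[A] Configure drbd

nfs01:~ # sudo vi /etc/drbd.conf

Make sure the configuration file contains the following two lines:

include "drbd.d/global_common.conf";

include "drbd.d/*.res";

Update the global DRBD configuration:

nfs01:~ # sudo vi /etc/drbd.d/global_common.conf

Add the following settings to the handlers and net sections: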

global {

usage-count no;

}

common {

handlers {

fence-peer "/usr/lib/drbd/crm-fence-peer.sh";

after-resync-target "/usr/lib/drbd/crm-unfence-peer.sh";

split-brain "/usr/lib/drbd/notify-split-brain.sh root";

pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

}

startup {

wfc-timeout 0;

}

options {

}

disk {

resync-rate 50M;

}

net {

after-sb-0pri discard-younger-primary;

after-sb-1pri discard-secondary;

after-sb-2pri call-pri-lost-after-sb;

}

}

[A] Create the NFS drbd device

Add a configuration file for the DRBD device:

nfs01:~ # sudo vi /etc/drbd.d/NW1-nfs.res

Enter:

resource NW1-nfs {

protocol C;

disk {

on-io-error detach;

}

on nfs01 {

address 10.0.0.11:7790;

device /dev/drbd0;

disk /dev/vg-NW1-NFS/NW1;

meta-disk internal;

}

on nfs02 {

address 10.0.0.12:7790;

device /dev/drbd0;

disk /dev/vg-NW1-NFS/NW1;

meta-disk internal;

}

}

Create the DRBD device and bring it up:

nfs01:~ # sudo drbdadm create-md NW1-nfs

--== Thank you for participating in the global usage survey ==--

The server's response is:

you are the 13007th user to install this version

initializing activity log

initializing bitmap (4096 KB) to all zero

Writing meta data...

New drbd meta data block successfully created.

success

nfs01:~ # sudo drbdadm up NW1-nfs

--== Thank you for participating in the global usage survey ==--

The server's response is:

you are the 2612th user to install this version

[1] Skip the initial synchronization

nfs01:~ # sudo drbdadm new-current-uuid --clear-bitmap NW1-nfs

[1] Set the primary node

nfs01:~ # sudo drbdadm primary --force NW1-nfs

[1] Wait until the new drbd device has finished synchronizing

nfs01:~ # sudo drbdsetup wait-sync-resource NW1-nfs

nfs01:~ # drbd-overview

0:NW1-nfs/0 Connected(2*) Primar/Second UpToDa/UpToDa

[1] Create a file system on the drbd device

nfs01:~ # sudo mkfs.xfs /dev/drbd0

meta-data=/dev/drbd0 isize=256 agcount=4, agsize=8388094 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0, sparse=0

data = bsize=4096 blocks=33552375, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=16382, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

nfs01:~ # sudo mkdir /srv/nfs/NW1

nfs01:~ # sudo chattr +i /srv/nfs/NW1

nfs01:~ # sudo mount -t xfs /dev/drbd0 /srv/nfs/NW1

nfs01:~ # sudo mkdir /srv/nfs/NW1/sidsys

nfs01:~ # sudo mkdir /srv/nfs/NW1/sapmntsid

nfs01:~ # sudo mkdir /srv/nfs/NW1/trans

nfs01:~ # sudo mkdir /srv/nfs/NW1/ASCS

nfs01:~ # sudo mkdir /srv/nfs/NW1/ASCSERS

nfs01:~ # sudo mkdir /srv/nfs/NW1/SCS

nfs01:~ # sudo mkdir /srv/nfs/NW1/SCSERS

nfs01:~ # sudo umount /srv/nfs/NW1

Configure the cluster framework

[1] Add the NFS drbd devices for SAP system NW1 to the cluster configuration

Because the resource names match the example in the official documentation, only the virtual IP address and the netmask need to be replaced, here with 10.0.0.10 and 24.

sudo crm configure property maintenance-mode=true

sudo crm configure primitive drbd_NW1_nfs \

ocf:linbit:drbd \

params drbd_resource="NW1-nfs" \

op monitor interval="15" role="Master" \

op monitor interval="30" role="Slave"

sudo crm configure ms ms-drbd_NW1_nfs drbd_NW1_nfs \

meta master-max="1" master-node-max="1" clone-max="2" \

clone-node-max="1" notify="true" interleave="true"

sudo crm configure primitive fs_NW1_sapmnt \

ocf:heartbeat:Filesystem \

params device=/dev/drbd0 \

directory=/srv/nfs/NW1 \

fstype=xfs \

op monitor interval="10s"

sudo crm configure primitive exportfs_NW1 \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1" \

options="rw,no_root_squash" clientspec="*" fsid=1 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive exportfs_NW1_sidsys \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1/sidsys" \

options="rw,no_root_squash" clientspec="*" fsid=2 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive exportfs_NW1_sapmntsid \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1/sapmntsid" \

options="rw,no_root_squash" clientspec="*" fsid=3 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive exportfs_NW1_trans \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1/trans" \

options="rw,no_root_squash" clientspec="*" fsid=4 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive exportfs_NW1_ASCS \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1/ASCS" \

options="rw,no_root_squash" clientspec="*" fsid=5 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive exportfs_NW1_ASCSERS \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1/ASCSERS" \

options="rw,no_root_squash" clientspec="*" fsid=6 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive exportfs_NW1_SCS \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1/SCS" \

options="rw,no_root_squash" clientspec="*" fsid=7 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive exportfs_NW1_SCSERS \

ocf:heartbeat:exportfs \

params directory="/srv/nfs/NW1/SCSERS" \

options="rw,no_root_squash" clientspec="*" fsid=8 wait_for_leasetime_on_stop=true op monitor interval="30s"

sudo crm configure primitive vip_NW1_nfs \

IPaddr2 \

params ip=10.0.0.10 cidr_netmask=24 op monitor interval=10 timeout=20

sudo crm configure primitive nc_NW1_nfs \

anything \

params binfile="/usr/bin/nc" cmdline_options="-l -k 61000" op monitor timeout=20s interval=10 depth=0

sudo crm configure group g-NW1_nfs \

fs_NW1_sapmnt exportfs_NW1 exportfs_NW1_sidsys \

exportfs_NW1_sapmntsid exportfs_NW1_trans exportfs_NW1_ASCS \

exportfs_NW1_ASCSERS exportfs_NW1_SCS exportfs_NW1_SCSERS \

nc_NW1_nfs vip_NW1_nfs

sudo crm configure order o-NW1_drbd_before_nfs inf: \

ms-drbd_NW1_nfs:promote g-NW1_nfs:start

sudo crm configure colocation col-NW1_nfs_on_drbd inf: \

g-NW1_nfs ms-drbd_NW1_nfs:Master
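The eight exportfs primitives above differ only in the resource name, the exported directory, and an fsid that counts up from 1. As a sketch of that pattern, the commands can be generated in a loop and reviewed before they are run. The function print_exportfs_primitives is a hypothetical helper; it only prints the crm commands (one per line, without line continuations) and does not change the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one "crm configure primitive" command for the
# export root /srv/nfs/<SID> and one for each subdirectory, numbering fsid
# from 1 in the order given.
print_exportfs_primitives() {
    local sid="$1" fsid=1 sub name dir
    shift
    # The empty first item stands for the export root itself.
    for sub in "" "$@"; do
        if [ -z "$sub" ]; then
            name="exportfs_${sid}"
            dir="/srv/nfs/${sid}"
        else
            name="exportfs_${sid}_${sub}"
            dir="/srv/nfs/${sid}/${sub}"
        fi
        printf 'sudo crm configure primitive %s ocf:heartbeat:exportfs params directory="%s" options="rw,no_root_squash" clientspec="*" fsid=%d wait_for_leasetime_on_stop=true op monitor interval="30s"\n' \
            "$name" "$dir" "$fsid"
        fsid=$((fsid + 1))
    done
}
```

Calling `print_exportfs_primitives NW1 sidsys sapmntsid trans ASCS ASCSERS SCS SCSERS` prints eight commands that correspond to the primitives listed above.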

[1] Disable maintenance mode

nfs01:~ # sudo crm configure property maintenance-mode=false

Create the STONITH device

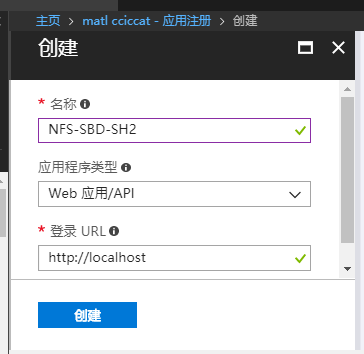

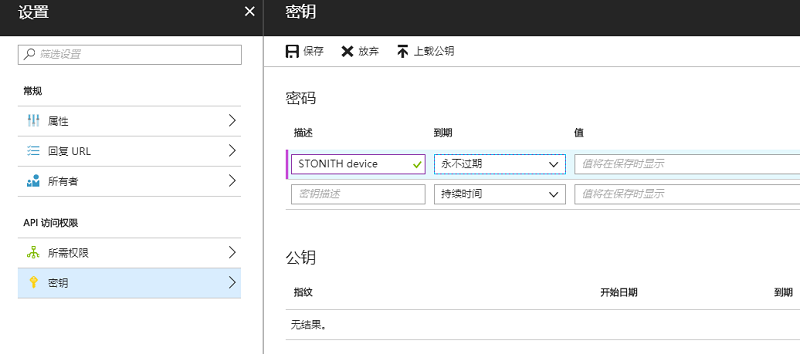

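Create an Azure Active Directory application

While creating the application, record the values of the following four parameters. They are needed later when the fence_azure_arm resource is configured:

| Value to record | fence_azure_arm parameter |
| --- | --- |
| Subscription Id | subscriptionId |
| Tenant Id | tenantId |
| Application Id | login |
| Auth Key | passwd |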

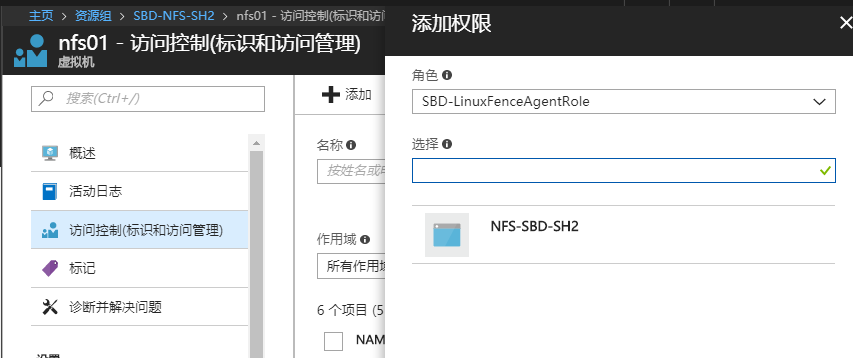

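Create a custom role for the fence agent

The earliest Microsoft online documentation for this NFS setup granted the Owner role directly. Since the documentation update in March 2018, a custom role is used instead. The custom role grants only read access to compute resources plus the actions to start and deallocate virtual machines, which is more secure.

I created the custom role with PowerShell on a local Windows machine.

Create the custom role definition file

Open a text editor from PowerShell:

PS> notepad customrole.json

Enter: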

{

"Name": "SBD-LinuxFenceAgentRole",

"Id": null,

"IsCustom": true,

"Description": "Allows to deallocate and start virtual machines",

"Actions": [

"Microsoft.Compute/*/read",

"Microsoft.Compute/virtualMachines/deallocate/action",

"Microsoft.Compute/virtualMachines/start/action"

],

"NotActions": [

],

"AssignableScopes": [

"/subscriptions/your-subscription-id"

]

}

Sign in to the Azure account

PS> Login-AzureRmAccount -EnvironmentName AzureChinaCloud

Select the subscription

PS> Set-AzureRmContext -SubscriptionId your-subscription-id

Create the role

PS> New-AzureRmRoleDefinition -InputFile .\customrole.json

Name : SBD-LinuxFenceAgentRole

Id : xxxx-xxxxx-xxxx

IsCustom : True

Description : Allows to deallocate and start virtual machines

Actions : {Microsoft.Compute/*/read, Microsoft.Compute/virtualMachines/deallocate/action,

Microsoft.Compute/virtualMachines/start/action}

NotActions : {}

AssignableScopes : {/subscriptions/your-subscription-id}

Add the custom role to the access control list of the virtual machines

Create the STONITH device

nfs01:~ # sudo crm configure property stonith-timeout=900

nfs01:~ # sudo crm configure primitive rsc_st_azure stonith:fence_azure_arm \

params subscriptionId="your-subscription-id" resourceGroup="SBD-NFS-SH2" tenantId="your-tenant-id" login="AAD-application-id" passwd="auth-key"

Create the fencing topology for SBD fencing

nfs01:~ # sudo crm configure fencing_topology \

stonith-sbd rsc_st_azure

Enable the STONITH device

nfs01:~ # sudo crm configure property stonith-enabled=true

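Scenario tests

File mount test

We can use sbd01 as a client and mount the NFS share through vnfs01, the host name of the virtual IP.

1. Create a local directory:

sbd01:~ # mkdir /myfile

2. Mount the NFS share:

sbd01:~ # sudo mount -t nfs4 vnfs01:/ /myfile/

3. List the contents of the NFS share:

sbd01:~ # ls /myfile/NW1

.rmtab ASCS ASCSERS SCS SCSERS sapmntsid sidsys trans

4. Check which node is currently active for NFS. In my case it is nfs01:

nfs01:~ # crm_mon -1 | grep exportfs_NW1_sapmntsid

exportfs_NW1_sapmntsid (ocf::heartbeat:exportfs): Started nfs01

5. Stop the pacemaker service on nfs01 and make sure that the NFS share mounted on sbd01 is still accessible:

sbd01:~ # ls /myfile/

NW1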

fencing 故障转移测试

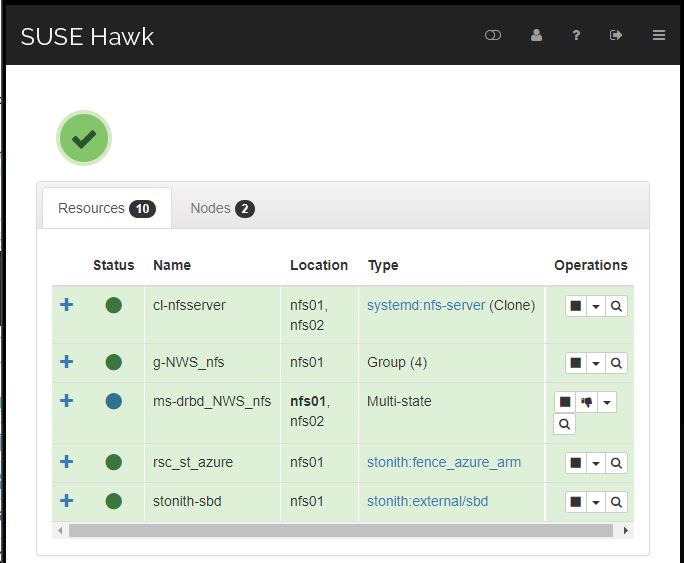

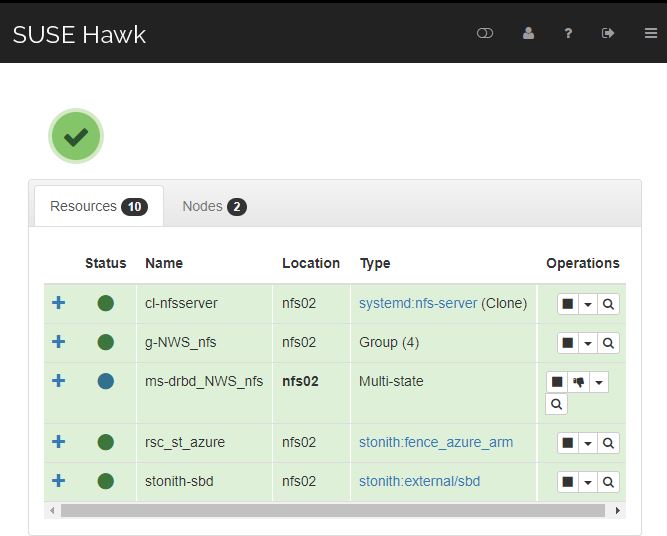

NFS集群配置结束以后,在机器控制台上,我们可以看到NFS工作nfs01上:

然后我们在nfs01上,禁用网卡:

nfs01:~ # sudo ifdown eth0

这样就会导致nfs01进入失联状态,期望集群能把nfs01拉出,工作流转移到nfs02上:

实际结果和期望结果一致,而且故障转移时间再1分钟以内。
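To put a number on the failover time from the client side, a small polling loop can retry a command until it succeeds and report how many seconds that took. This is a sketch, not part of the original test: wait_until_ok is a hypothetical helper, and wrapping the probe in timeout (from coreutils) is an assumption made because a command on a hard-mounted NFS share may block instead of failing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a command once per second until it succeeds.
# $1 is the time limit in seconds; the remaining arguments are the command.
# Prints the elapsed seconds on success, returns 1 once the limit is reached.
wait_until_ok() {
    local limit="$1" start
    shift
    start=$(date +%s)
    until "$@" >/dev/null 2>&1; do
        if [ $(( $(date +%s) - start )) -ge "$limit" ]; then
            return 1
        fi
        sleep 1
    done
    echo $(( $(date +%s) - start ))
}
```

Started on sbd01 right after the interface on nfs01 is disabled, `wait_until_ok 120 timeout 5 ls /myfile/NW1` would print roughly how long the share was unreachable.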
